"Asking an AI tool is like asking an omniscient friend who possesses all the knowledge and answers directly in a polite, friendly way."

That is how a 25-year-old describes using chatbots instead of traditional search engines in the survey Swedes and the Internet, a description that one in five respondents agree with.

But even though the AI seems foolproof and has all the knowledge in the world, it is still, in a way, stupid.

"It doesn't know what is true or false. It has no understanding of the world of its own and constructs its answers based on probability," says Björn Appelgren at the Internet Foundation.

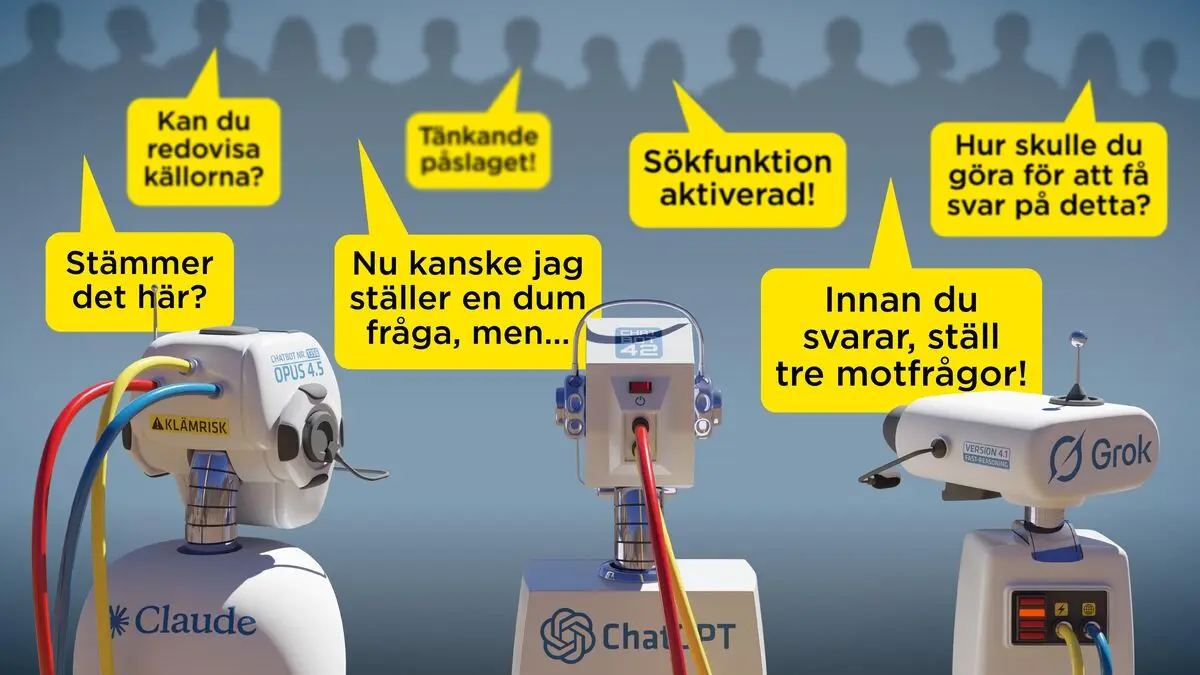

So how can you use AI critically? Here are some tips.

1. Don't trust it blindly

"You can never blindly trust generative AI," says Linda Mannila, visiting professor at Linköping University. The chatbot is not a fact bank or an encyclopedia.

Remember that the answers are based on probability and statistics: the model has seen patterns in earlier data and, based on them, tries to predict what will be right in a new situation.
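To make the point about probability concrete, here is a minimal sketch of a toy word predictor. It is not how any real chatbot is built; the training text and all function names are made up for this illustration. It only counts which word most often followed another word and repeats the most common pattern.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words followed it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def next_word_probabilities(model, word):
    """Turn the raw follower counts for one word into probabilities."""
    counts = model.get(word, Counter())
    total = sum(counts.values())
    return {candidate: count / total for candidate, count in counts.items()}


def most_likely_next_word(model, word):
    """Pick the most common follower, or None if the word was never seen."""
    counts = model.get(word)
    if not counts:
        return None
    return counts.most_common(1)[0][0]


# Hypothetical toy training text, invented for this example.
training_text = (
    "the moon is made of rock "
    "the moon is made of cheese "
    "the moon is made of cheese"
)

model = train_bigram_model(training_text)
probabilities = next_word_probabilities(model, "of")
prediction = most_likely_next_word(model, "of")

print(probabilities)
print(prediction)
```

The toy model predicts "cheese" after "of" simply because that pattern appeared most often in its training text. Nothing in the code checks whether the statement is true.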

2. Ask the chatbot to cite sources

Language models can respond with links to sources. Does the original source seem credible? Also try clicking the link, because chatbots sometimes make up links that don't exist.

3. Ask: Is that right?

AI enthusiast Anders Bjarby's "super tip" is to ask follow-up questions. One of them is "Is that right?"

"When you first submit the question, it will just cough up what it thinks is appropriate. But when you ask 'Is that right?', it takes into account both the question and the previous answer."

Appelgren is on the same track:

"Don't settle for the first answer. Ask the tool to elaborate, reason, or explain again."
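Why a follow-up question can help is easier to see in a small sketch. It assumes the chat tool passes the whole conversation back to the model on every turn, stored as a list of role and content entries. That layout is a common convention, not any particular vendor's API. The function names and the placeholder answer are hypothetical.

```python
def add_message(history, role, content):
    """Return a new history list with one more message appended."""
    return history + [{"role": role, "content": content}]


def build_model_input(history):
    """Flatten the whole conversation into the text the model would read."""
    return "\n".join(f"{m['role']}: {m['content']}" for m in history)


# First turn: the model only sees the question.
history = []
history = add_message(history, "user", "When was the Eiffel Tower built?")
first_input = build_model_input(history)

# Placeholder standing in for whatever the chatbot answered first.
history = add_message(history, "assistant", "<the chatbot's first answer>")

# Follow-up turn: the model now sees the question, its own answer,
# and the request to check that answer.
history = add_message(history, "user", "Is that right?")
followup_input = build_model_input(history)

print(followup_input)
```

On the second turn the model's input contains its own earlier answer, so it has a chance to re-examine it instead of starting from the question alone.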

4. Turn on the thinking

Chatbots often let you choose between different models, and the more advanced "thinking" models generally give more reliable answers.

"Then it will reason and handle some of the 'is that right' thinking internally instead," says Bjarby.

5. Turn on search

The model often takes the easy route and uses old training data. If you ask the AI to search the web (sometimes you may need to turn the feature on), it will check the latest information, which is especially important for news events.

6. Ask how, not what

Instead of asking for an answer, you can ask the AI to reason about its method: "How would you go about getting an answer to this?" Then you can decide for yourself whether the method seems reasonable.

7. Ask questions

The answers can improve if you ask the AI to pose clarifying questions about anything ambiguous in the prompt. For example, you can write: "Before you answer, ask me three clarifying questions."

8. Dare to be stupid and long-winded

The chatbot will never get tired of your questions. Feel free to ask stupid questions, and ask it to explain in a different way if you don't understand. The models can process large amounts of text, so write in detail if you want.

"Realize that it's just technology. It's not human. You can be lulled into a sense of security because you can talk to it in natural language. But just because it feels more human doesn't mean it's more true or reliable than a Google search," says Mannila.

Gustav Sjöholm/TT

Facts: AI glossary


Agents: Autonomous AI systems that can plan and execute tasks independently to achieve a goal.

AGI: Artificial General Intelligence, a hypothetical future AI that can solve any task a human can.

Chatbot: The actual interface where the user types questions and receives answers. Chatbots, such as Claude, ChatGPT, and Gemini, are usually driven by a language model.

Generative AI: An AI that can create (generate) new material such as code, text, image, audio or video.

Hallucinations: When the AI "makes up" things that aren't true.

Local AI: When a language model is downloaded and run on your own or company's computers instead of in the cloud.

Prompt: The instruction the user gives a language model.

Language model: AI that has been trained on large amounts of text to be able to create text on its own. Also called LLM.

Token: The building blocks that AI divides text into, usually a few characters.

Training data: The enormous amount of text, data, and code that the models are fed.

Vibe coding: Programming where the AI creates the code based on prompts.

+ Four out of ten Swedes used an AI tool last year. The 15–25 age group in particular has embraced the technology.

+ Among adults who are studying, two out of three use AI.

+ One in five Swedes uses AI tools for things that could have been Googled.

+ Swedes use AI most for text processing (three out of ten). One in six has generated images, one in 20 has generated code, and even fewer have generated audio.

Source: Swedes and the Internet, Internet Foundation (Novus, January 2025, 3,362 participants)